Troubleshooting Code Challenges and SQL with Azure App Insights

Sometimes we are engaged to solve tough infrastructure performance problems. We’ve all been in situations where the infrastructure team is blaming the developers, the developers are blaming the infrastructure, and the product or project manager is frustrated because nothing is getting solved. Fortunately, tools like Azure App Insights can quickly identify performance issues with SQL whether it’s running on-premises or in the cloud.

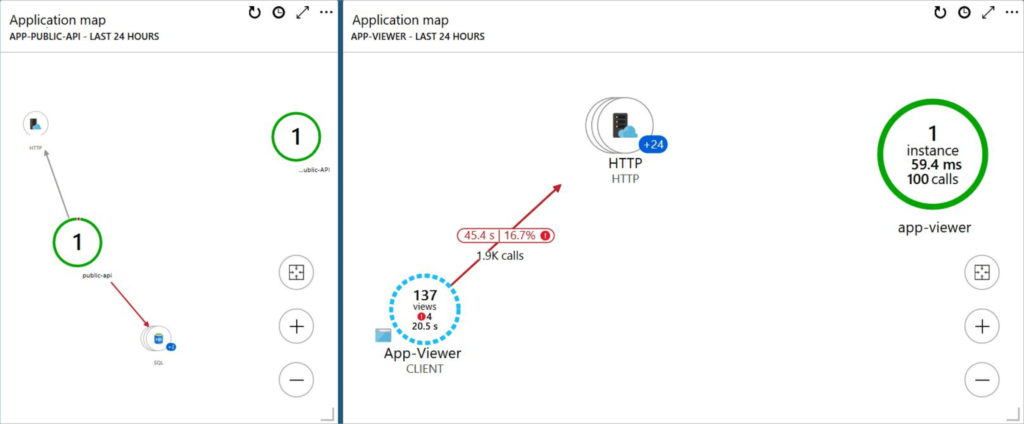

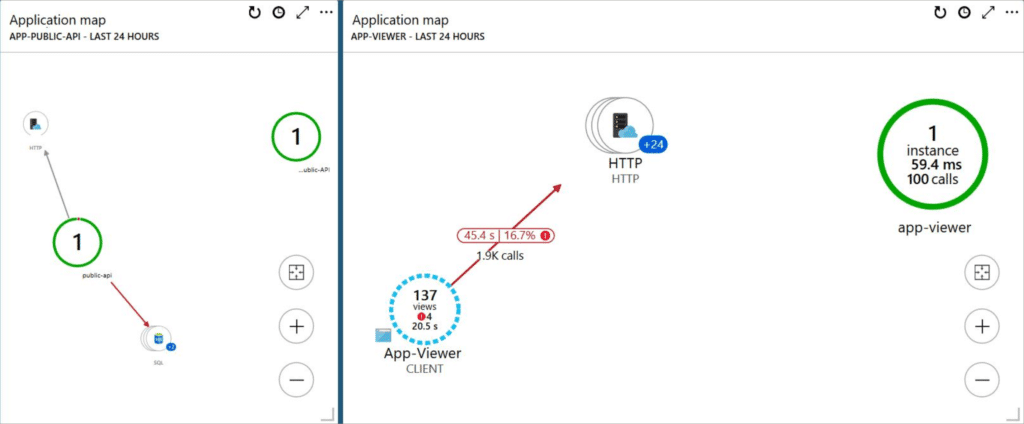

Recently we were brought in to provide a second set of eyes on a problem that was causing delays on an important project. The SQL database is running in an FCI cluster on Azure IaaS, the application is running as an Azure App Service, and ultimately there were “random and sporadic” issues. Random and sporadic are every troubleshooter’s favorite words of course, because we like repeatable and predictable… but that’s just not the way the world works!

We worked with their development team to implement Azure App Insights for SQL queries in order to log the random and sporadic failures so that we could find them quickly instead of hoping to be able to reproduce the errors.

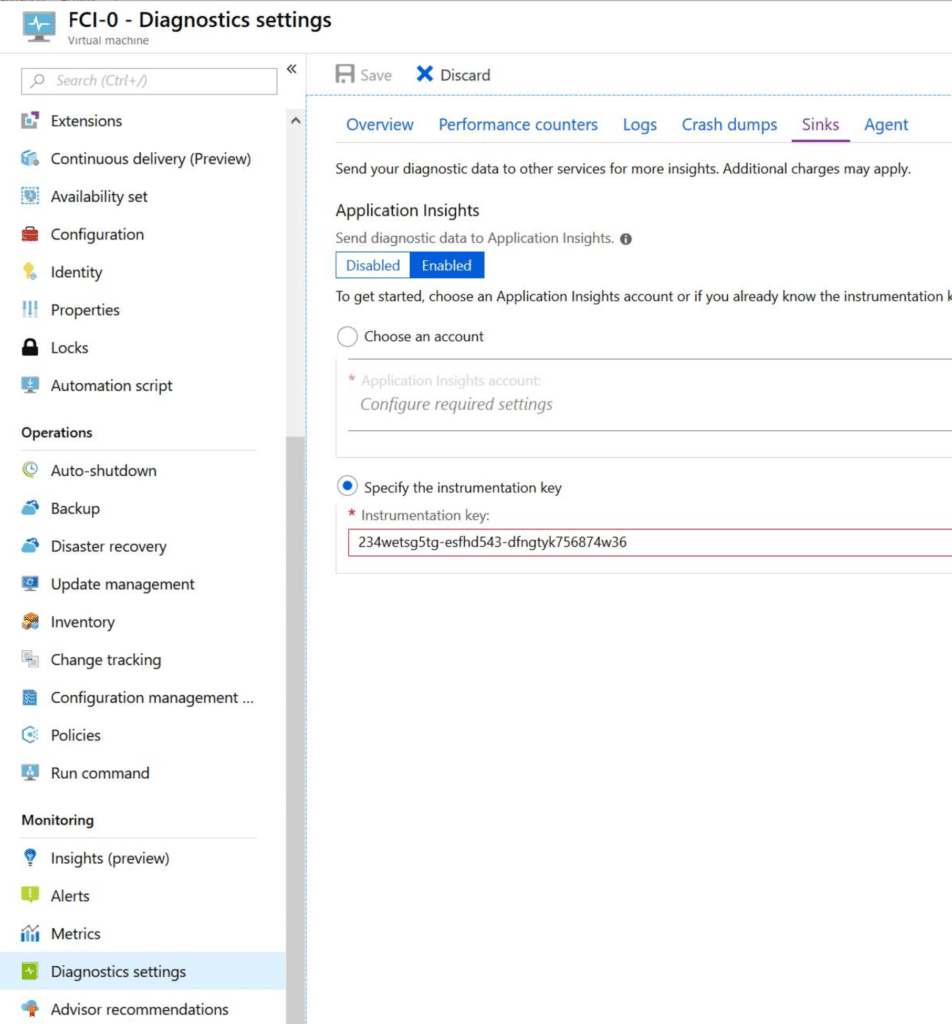

Setting up App insights as a VM is as easy as:

However for setting up against App Services, there is great documentation at docs.microsoft.com to learn how you use special tags to monitor the code.

After some due diligence, configuring memory threshold limitations on the SQL cluster to help eliminate reoccuring memory pressure events. While this did help relieve a few memory management issues, upon continued investigation we identified a few memory issues that were the result of unhandled exceptions in the code.

Although the failed methods reported by the developers were stating connection errors, the actual exceptions were throwing various logic issues. Manifesting as closed connections in some cases, three examples of which are obfuscated and shown below.

SQL with Azure App Insights Sample 1: Referenced Database does not exist; causes a 1.3 second timeout

CUSTOMER-USE-APP-API Log Query:

// All telemetry for Operation ID: xxx-yyy1

union *

// Apply filters

| where timestamp > datetime("2019-03-05T19:38:26.111Z") and timestamp < datetime("2019-03-07T19:38:26.111Z")

| where operation_Id == "xxx-yyy1"

Failed method

System.Data.SqlClient.SqlConnection.OnError

Exception

System.Data.SqlClient.SqlException (0x80131904): Invalid object name 'XXX.YYYY'.

SQL with Azure App Insights Sample 2: Unhandled Deadlocked resource exceptions; causes a 3.4 minute timeout

CUSTOMER-USE-APP-API Log Query:

// All telemetry for Operation ID: xxx-yyy2

union *

// Apply filters

| where timestamp > datetime("2019-03-03T23:05:13.481Z") and timestamp < datetime("2019-03-05T23:05:13.481Z")

| where operation_Id == "xxx-yyy2"

Failed Method

System.Data.SqlClient.SqlConnection.OnError

Exception

System.Data.SqlClient.SqlException (0x80131904): The ROLLBACK TRANSACTION request has no corresponding BEGIN TRANSACTION.

SQL with Azure App Insights Sample 3: Circular reference causing a 1.3 second delay

CUSTOMER-USE-APP-API Log Query:

// All telemetry for Operation ID: xxx-yyy3

union *

// Apply filters

| where timestamp > datetime("2019-03-05T19:38:27.181Z") and timestamp < datetime("2019-03-07T19:38:27.181Z")

| where operation_Id == "xxx-yyy3"

Failed Method

Newtonsoft.Json.Serialization.JsonSerializerInternalWriter.CheckForCircularReference

Summary

Ultimately, a neutral look by Infused Innovations, paired with Azure AppInsights and our SQL expertise, moved the project forward. With the focus back on the structure and error trapping of the code, our client is confident that the SQL cluster has enough resources to run the platform.